Google Voice Download

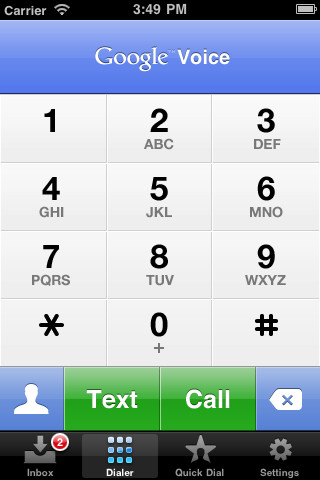

Jun 24, 2017. Access your Google Voice account right from your iPhone, iPad, and iPod Touch. Send free SMS messages to US phones and make international calls at very low rates. Get transcribed voicemail and save time by reading instead of listening. Make calls with your Google Voice number. Download Google Voice for Windows: one place to make calls on your computer with Google Voice.

Troubleshooting Tips

• A red LED on the Raspberry Pi near the power connector should light. If it doesn't, unplug the power, unplug the connector to the microphone, and power up again. If it lights after powering up without the microphone, then the microphone board may be defective.
• The lamp in the button will not light up until you run a demo, so don't worry that it's off. (This is different from the AIY Essentials Guide, which describes an older software version.)
• If you don't see anything on your monitor, make sure the HDMI and power cables are fully inserted into the Raspberry Pi.

• If you see 'Openbox Syntax Error', you'll need to and try booting the device again. User’s Guide. Go to the panel.

Make sure to log in with the same Google account as before.

• Turn on the following:
  • Web and app activity
  • Device information
  • Voice and audio activity
• You’re ready to turn it on: follow the steps below.
• You can also SSH from another computer. You’ll need to use ssh -X to handle authentication through the browser when starting the example for the first time.
• Authorize access to the Google Assistant API when prompted.
• Make sure you start the example manually the first time: if you run it as a service, you won't be prompted for authorization.
• Try an example query like 'how many ounces in 2 cups' or 'what's on my calendar?' and the Assistant should respond!
• If the voice recognizer doesn't respond to your button presses or queries, you may need to restart.

• If the response is 'Actually, there are some basic settings that need your permission first.', perform step 8 again, being sure to use the same account that you used for the authorization step.

We provide three demo apps that showcase voice recognition and Google Assistant with different capabilities. They may be used as templates to create your own apps.

When a demo app is running, the LED inside the arcade button and the LED in the center of the Voice Hat will pulse every few seconds. If you don’t see the LED pulse, check the.

| Demo App | Description | Raspberry Pi supported |
| --- | --- | --- |
| assistant_library_demo.py | Showcases the Google Assistant Library and hotword detection ('Okay, Google'). | 2b, 3b |
| assistant_grpc_demo.py | Showcases the Google gRPC APIs and button trigger. | 2b, 3b, Zero W |
| cloud_speech_demo.py | Showcases the Google Cloud Speech APIs, button trigger, and custom voice commands. | 2b, 3b, Zero W |

3.1. Start the assistant library demo app.

Please see the table below for a list of modules available for developer use. The full APIs are available on GitHub.

| Module | APIs Provided | Description & Uses in Demo Apps |
| --- | --- | --- |
| aiy.voicehat | get_button() get_led() get_status_ui() | For controlling the Arcade button and the LED. See uses in any demo app. |
| aiy.audio | get_player() get_recorder() record_to_wave() play_wave() play_audio() say() | For controlling the microphone and speaker. It is capable of speaking some text or playing a wave file. See uses in assistant_grpc_demo.py and cloud_speech_demo.py. |
| aiy.cloudspeech | get_recognizer() | For accessing the Google CloudSpeech APIs. See uses in cloud_speech_demo.py. |
| aiy.i18n | set_locale_dir() set_language_code() get_language_code() | For customizing the language and locale. Not used directly by demo apps. Some APIs depend on this module. For example, aiy.audio.say() uses this module for speech synthesis. |
| aiy.assistant.grpc | get_assistant() | For accessing the Google Assistant APIs via gRPC. See uses in assistant_grpc_demo.py. |

The Google Assistant Library used in assistant_library_demo.py is the official Google Assistant Library for Python.

Create a new activation trigger.

An activation trigger is a general term describing the condition on which we activate voice recognition or start a conversation with the Google Assistant. Previously you have seen two different types of activation triggers:

• Voice activation trigger: This is the 'Okay, Google' hotword detection in the assistant library demo. The assistant library continuously monitors the microphones on your VoiceHat. As soon as it detects that you said 'Okay, Google', a conversation is started.
• Button trigger: This is when you press the arcade button. Internally, it is connected to the GPIO on the Raspberry Pi (take a look at the driver code: aiy._drivers._button).

You may design and implement your own triggers.

For example, you may have a motion detection sensor driver that can call a function when motion is detected.

    # =========================================
    # Makers! Implement your own actions here.
    # =========================================

    import aiy.audio
    import aiy.cloudspeech
    import aiy.voicehat


    def main():
        '''Start voice recognition when motion is detected.'''
        # MotionDetector is your own sensor driver class; it is not part of aiy.
        my_motion_detector = MotionDetector()
        recognizer = aiy.cloudspeech.get_recognizer()
        aiy.audio.get_recorder().start()
        while True:
            my_motion_detector.WaitForMotion()
            text = recognizer.recognize()
            # recognize() returns None when nothing was heard.
            if text:
                aiy.audio.say('You said ' + text)


    if __name__ == '__main__':
        main()

1.4. Use the Google Assistant library with a button.

In the User's Guide, you learned to use the Google Assistant library to make Voice Kit into your own Google Home. Sometimes, we also want to use an external trigger to start a conversation with the Google Assistant. Example external triggers include the default button (GPIO trigger, demonstrated in cloud_speech_demo.py and assistant_grpc_demo.py), a motion sensor, or a clap trigger. This section shows how to start a conversation with a button press. It is a little trickier because of the way the assistant library works. If you are new to programming, you may skip the 'Design' section and jump to the 'Implementation' subsection.

Design

Each Python app has a main thread, which executes your code in main.

For example, all our demo apps contain the following code.

    def on_button_press(_):
        assistant.start_conversation()

    aiy.voicehat.get_button().on_press(on_button_press)

    for event in assistant.start():
        process_event(event)

Save it as my_demo.py, run it in the terminal, and press the button. Nothing happens.

This is because the assistant library's event loop blocks the main thread, so the internal event loop inside the button driver does not get to run. For more details, take a look at how the button driver works (see src/aiy/_drivers/_button.py).

To summarize, the button driver runs an internal event loop (from the stock GPIO driver) in the main thread, and the assistant library also runs an event loop that blocks the main thread. To solve this problem and allow both event loops to run, we need to use Python's threading library and run the assistant library event loop in a separate thread. For more information, see the Python threading documentation.

Implementation

The source code for a working demo is at: src/assistant_library_with_button_demo.py

We created a class MyAssistant to capture all the logic.
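The threading idea can be tried out without any Voice Kit hardware. The following is a minimal sketch using only the Python standard library; `blocking_event_loop` is a hypothetical stand-in for the assistant library's event loop and is not part of the AIY code. It illustrates that once the blocking loop runs in its own thread, the main thread stays free to deliver events such as a button press.

```python
import queue
import threading


def blocking_event_loop(events, handled):
    """Hypothetical stand-in for a blocking `for event in assistant.start()` loop."""
    while True:
        event = events.get()   # blocks until an event arrives
        if event is None:      # sentinel value: stop the loop
            break
        handled.append(event)


events = queue.Queue()
handled = []

# Run the blocking loop in a worker thread so the main thread stays free.
worker = threading.Thread(target=blocking_event_loop, args=(events, handled))
worker.start()

# The main thread can now do other work, here a simulated button press.
events.put('button pressed')
events.put(None)
worker.join()
print(handled)
```

If `blocking_event_loop` were called directly on the main thread instead, the two `events.put` calls would never be reached, which mirrors the "nothing happens" behaviour described above.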

In its constructor, we created the thread that will be used to run the assistant library event loop.

    def _run_task(self):
        credentials = aiy.assistant.auth_helpers.get_assistant_credentials()
        with Assistant(credentials) as assistant:
            # Save assistant as self._assistant, so later the button press
            # handler can use it.
            self._assistant = assistant
            for event in assistant.start():
                self._process_event(event)

We have yet to hook up the button trigger at this point, because we want to wait until the Google Assistant is fully ready. In the self._process_event function, we enable the button trigger when the API tells us it is ready to accept conversations.

Why do I need to turn on billing?

The voice recognizer cube uses Google’s Cloud Speech API.

If you use it for less than 60 minutes a month, it’s free. Beyond that, the cost is $0.006 per 15 seconds. Don’t worry: you’ll get a reminder if you go over your free limit.

• In the Cloud Console, open the navigation menu
• Click Billing
• If you don’t have a billing account, then click New billing account and go through the setup
• Return to the, then click the My projects tab.
• Find the name of your new project. Make sure it’s connected to a billing account.

    '''A demo of the Google CloudSpeech recognizer.'''

    import os

    import aiy.audio
    import aiy.cloudspeech
    import aiy.voicehat


    def main():
        recognizer = aiy.cloudspeech.get_recognizer()
        # Phrases the recognizer should expect to hear.
        recognizer.expect_phrase('turn off the light')
        recognizer.expect_phrase('turn on the light')
        recognizer.expect_phrase('blink')
        recognizer.expect_phrase('repeat after me')

        button = aiy.voicehat.get_button()
        led = aiy.voicehat.get_led()
        aiy.audio.get_recorder().start()

        while True:
            print('Press the button and speak')
            button.wait_for_press()
            print('Listening...')
            text = recognizer.recognize()
            if text is None:
                print('Sorry, I did not hear you.')
            else:
                print('You said "', text, '"')
                if 'turn on the light' in text:
                    led.set_state(aiy.voicehat.LED.ON)
                elif 'turn off the light' in text:
                    led.set_state(aiy.voicehat.LED.OFF)
                elif 'blink' in text:
                    led.set_state(aiy.voicehat.LED.BLINK)
                elif 'repeat after me' in text:
                    # Speak back everything after the trigger phrase.
                    to_repeat = text.replace('repeat after me', '', 1)
                    aiy.audio.say(to_repeat)
                elif 'goodbye' in text:
                    os._exit(0)


    if __name__ == '__main__':
        main()

You may add more voice commands. Ideas include a 'time' command that speaks the current time, or commands to control your smart light bulbs.

Run your app automatically.

Imagine you have customized an app with your own triggers and the Google Assistant library. It is an AIY-version of a personalized Google Home.

Now you want to run the app automatically when your Raspberry Pi starts. All you have to do is make a system service (like the status-led service mentioned in the user's guide) and enable it. Assume that your app is src/my_assistant.py and that we would like to make a system service called 'my_assistant'. First, it is always a good idea to test your app and make sure it works as expected. Then you need a systemd config file.

Open your favorite text editor and save the following content as my_assistant.service.

    [Unit]
    Description=My awesome assistant app

    [Service]
    ExecStart=/bin/bash -c '/home/pi/AIY-voice-kit-python/env/bin/python3 -u src/my_assistant.py'
    WorkingDirectory=/home/pi/AIY-voice-kit-python
    Restart=always
    User=pi

    [Install]
    WantedBy=multi-user.target

The config file is explained below.

| Line | Explanation |
| --- | --- |
| Description= | A textual description of the service. |
| ExecStart= | The target executable to run. In this case, it executes the python3 interpreter and runs your my_assistant.py app. |
| WorkingDirectory= | The directory your app will be working in. By default, we use /home/pi/AIY-voice-kit-python. If you are working as a different user, please update the path accordingly. Note that the file does not support $HOME, so you have to explicitly use /home/pi/. |
| Restart= | Here we specify that the service should always be restarted should there be an error. |
| User= | The user to run the script. By default we use the 'pi' user. If you are working as a different user, please update accordingly. |
| WantedBy= | Part of the dependency specification in systemd configuration. You just need to use this value here. |

For more details on systemd configuration, please consult the systemd documentation.

We also need to move the file to the correct location, so systemd can make use of it. To do so, move the file with the following command:

    sudo mv my_assistant.service /lib/systemd/system/

Now your service has been configured! To enable your service, enter:

    sudo systemctl enable my_assistant.service

Note how we are referring to the service by its service name, not the name of the script it runs. To disable your service, enter:

    sudo systemctl disable my_assistant.service

To manually start your service, enter:

    sudo service my_assistant start

To manually stop your service, enter:

    sudo service my_assistant stop

To check the status of your service, enter:

    sudo service my_assistant status

3.5. Use TensorFlow on device.

Want to learn how to use your Voice Kit to control other IoT devices? You can start here with a Particle Photon (a Wi-Fi development kit for IoT projects) and Dialogflow (a tool for creating conversational interfaces). This tutorial will show how to make your Voice Kit communicate with Dialogflow (and Actions on Google) to control an LED light with the Photon by voice. Get all the code for this example.

What's included

This example ties together multiple technology platforms, so there are a few separate components included in this repo:

• dialogflow-agent - an agent for Dialogflow
• dialogflow-webhook - a web app to parse and react to the Dialogflow agent's webhook
• particle-photon - a Photon app to handle web requests, and to turn the light on and off

We've included two separate web app implementations.

Choose (and build on) the one that best suits your preferences:

• 1-firebase-functions - a microservices-oriented implementation, built for deployment to Cloud Functions for Firebase, a serverless, on-demand platform
• 2-app-engine - a server-based implementation, designed to run on Google App Engine (or your server of choice)

This should be enough to get you started and on to building great things!

What you'll need

We’ll build our web app with Node.js, and will rely on some libraries to make life easier.

On the hardware side, you will need:

• Your AIY Voice Kit (or another device running Google Assistant)
• a Particle Photon (or a similar web-connected microcontroller)

It's handy to have a breadboard, some hookup wire, and a bright LED, and the examples will show those in action. However, the Photon has an addressable LED built in, so you can use just the Photon itself to test all the code presented here if you prefer.

You'll also need accounts with:

• Dialogflow (for understanding user voice queries)
• Google Cloud (for hosting the webhook webapp/service)
• Particle (for deploying your Photon code and communicating with the Particle API)

If you're just starting out, or if you're already comfortable with a microservices approach, you can use the 1-firebase-functions example: it's easy to configure and requires no other infrastructure setup. If you'd prefer to run it on a full server environment, or if you plan to build out a larger application from this, use the 2-app-engine example (which can also run on any other server of your choosing). If you've got all those (or similar services/devices) good to go, then we're ready to start!

Getting started

Assuming you have all the required devices and accounts as noted above, the first thing you'll want to do is to set up apps on the corresponding services so you can get your devices talking to each other.

Local setup

First, you'll need to clone this repo, and cd into the newly-created directory.

    git clone git@github.com:google/voice-iot-maker-demo.git
    cd voice-iot-maker-demo

You should see three directories (alongside some additional files):

• dialogflow-agent - the contents of the action to deploy on Dialogflow
• dialogflow-webhook - a web application to parse the Google Actions/Dialogflow webhook (with server-based and cloud function options)
• particle-photon - sample code to flash onto the Particle Photon

Once you’ve taken a look, we’ll move on!

Dialogflow

Using the Dialogflow account referenced above, you’ll want to create an agent. We'll be setting up a webhook to handle our triggers and send web requests to the Particle API.

• Create a new agent. You can name it whatever you like
• Select Create a new Google project as well
• In the Settings section (click on the gear icon next to your project name), go to Export and Import
• Select Import from zip and upload the zip provided (./dialogflow-agent/voice-iot-maker-demo.zip)

You've now imported the basic app shell. Take a look at the new ledControl intent (viewable from the Intents tab). You can have a look there now if you're curious, or continue on to fill out the app's details.

• Head over to the Integrations tab, and click Google Assistant.
• Scroll down to the bottom, and click Update Draft
• Go back to the General tab (in Settings), and scroll down to the Google Project details.
• Click on the Google Cloud link and check out the project that's been created for you. Feel free to customize this however you like.
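To make the role of the webhook more concrete, here is a minimal Python sketch of the translation step it performs: turning the parameters of a matched intent into a call to a Particle cloud function. The repo's own webhook is written in Node.js, so this is only an illustration. The parameter name `state`, the cloud function name `led`, and the device ID and token values are hypothetical placeholders, not taken from the example code. The sketch only builds the request and does not send it.

```python
from urllib.parse import urlencode

PARTICLE_API = 'https://api.particle.io/v1/devices'


def build_particle_request(parameters, device_id, access_token,
                           function_name='led'):
    """Build the URL, headers, and body for calling a Particle cloud function.

    `parameters` is the dict of intent parameters extracted by Dialogflow.
    The 'state' key and the 'led' function name are assumptions made for
    illustration only.
    """
    state = str(parameters.get('state', '')).strip().lower()
    if state not in ('on', 'off'):
        raise ValueError('unsupported LED state: %r' % state)

    url = '%s/%s/%s' % (PARTICLE_API, device_id, function_name)
    headers = {'Authorization': 'Bearer ' + access_token}
    body = urlencode({'arg': state})  # Particle passes 'arg' to the function
    return url, headers, body


# Placeholder device ID and token, for demonstration only.
url, headers, body = build_particle_request(
    {'state': 'On'}, device_id='0123456789abcdef', access_token='my-token')
print(url)
print(body)
```

Keeping the request construction separate from the network call makes the mapping easy to check by hand before any device is involved.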

    Clap your hands then speak, or press Ctrl+C to quit.
    [2016-12-19 10:41:54,425] INFO:trigger:clap detected
    [2016-12-19 10:41:54,426] INFO:main:listening.
    [2016-12-19 10:41:54,427] INFO:main:recognizing.
    [2016-12-19 10:41:55,048] INFO:oauth2client.client:Refreshing access_token
    [2016-12-19 10:41:55,899] INFO:speech:endpointer_type: START_OF_SPEECH
    [2016-12-19 10:41:57,522] INFO:speech:endpointer_type: END_OF_UTTERANCE
    [2016-12-19 10:41:57,523] INFO:speech:endpointer_type: END_OF_AUDIO
    [2016-12-19 10:41:57,524] INFO:main:thinking.
    [2016-12-19 10:41:57,606] INFO:main:command: light on
    [2016-12-19 10:41:57,614] INFO:main:ready.

Description

Google Voice gives you a free phone number for calling, text messaging, and voicemail. It works on smartphones and computers, and syncs across your devices so you can use the app while on the go or at home.

You’re in control

Forward calls, text messages, and voicemail to any of your devices, and get spam filtered automatically. Block numbers you don’t want to hear from.

Backed up and searchable

Calls, text messages, and voicemails are stored and backed up to make it easy for you to search your history.

Chat in groups, with photos

Send group texts and share photos instantly.

Your voicemail, transcribed

Google Voice provides free voicemail transcriptions that you can read in the app or have sent to your email.

Cheap international calling

Make international calls at competitive rates without using your mobile data.

Keep in mind:

• Google Voice is currently only available in the US
• All calls made using Google Voice for iPhone use the standard minutes from your cell phone plan
• Calls are placed through a US-based Google Voice access number, which may incur costs (e.g. when traveling internationally).

By Charlieios Almost 12 hours. A new record! Up to 2 stars now, was 1 —— Was hopeful the latest would address the issues, look like no improvement, in fact now it locks up and the app has to be closed. Let’s get the bugs out before new features?

----- The app fails to notify you of a new message about 50% of the time. No sound, nothing. You have to constantly refresh the app to see if anybody’s responded to you. I’ve tried closing the app and also resetting the phone, neither of which helps. If you try to go back and follow your text message conversations, they are out of order, so you can’t tell which reply goes with which incoming text message.

It appears that the app is caching your responses, as you will see those responses pop up randomly in your text stream. That makes it totally unusable for documentation or even a quick review of what’s been said. The app crashes on a regular basis, often several times a day. I’ve been a Google Voice user for years; it’s a great application when it works.

----- Can't wait for Apple to approve the new version. Will update the review when they do. ----- Window disappears almost every time you open it. Can't log out due to the settings window crashing. Do not download.

By Mark@Boston A while back, when you redesigned your app and changed the logo, the touch-tone dialing sound was removed, so now when I dial each digit of a number there is no touch-tone audio feedback. It’s very frustrating and causes me to misdial frequently.

Please bring back the touch-tone audio dialing. Also, dialing numbers is hit or miss. Sometimes the number keys acknowledge being pressed and other times not so much, or pressing a number key one time results in two or three of the same number. I often find myself hitting the backspace key and attempting to dial the number repeatedly. What has gone wrong with this app? I feel like it has a mind of its own.